Use Traveler APIs to search for scenes and download satellite data (Part 2)

Introduction

This article is divided into two parts, Part 1 and Part 2. It guides you through downloading satellite data by calling the Tellus Satellite Data Traveler APIs from JupyterLab. Part 1 shows how to search for scenes and get scene information. Part 2 shows how to get information on the files registered to a scene and how to generate download URLs. This section is Part 2.

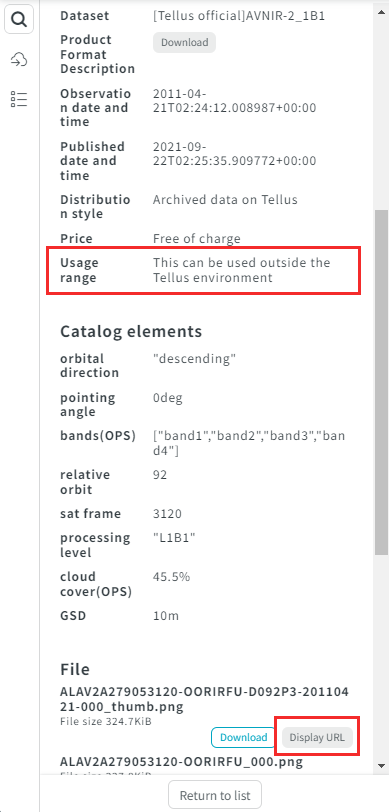

The APIs introduced in this article can be used only for archived datasets. To check whether a dataset is archived, open the dataset's Details page in Tellus Traveler and look at the “Purchase settings” > “Distribution style” field. Archived datasets are marked “Archived data on Tellus”.

See Part 1 to learn how to issue an API token, install the “Requests” module, search for scenes using search criteria, and get scene information from the search results. For more information on the APIs, refer to the Tellus Traveler API documentation.

When you paste the sample code from this article into JupyterLab, replace the value of TOKEN (shown as "TOKENXXXXXXXXXXXXXXXXXX") with your own API token.
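Hard-coding the token in every notebook makes it easy to share by accident. One alternative is to read the token from an environment variable at run time and build the same headers the samples use. The sketch below assumes a variable named TELLUS_TOKEN. That name is hypothetical and is not defined by Tellus, so you can choose your own.

```python
import os


def load_token(var_name="TELLUS_TOKEN"):
    """Read the API token from an environment variable (hypothetical name)."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(
            "Set the {} environment variable to your Tellus API token".format(var_name)
        )
    return token


def build_headers(token):
    """Build request headers in the same form as REQUESTS_HEADERS in the samples."""
    return {
        "Authorization": "Bearer " + token,
        "Content-Type": "application/json",
    }
```

With this in place, `TOKEN = load_token()` and `REQUESTS_HEADERS = build_headers(TOKEN)` can replace the hard-coded lines in each sample.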

Prepare in advance

An API token is required to use the Tellus Satellite Data Traveler APIs. In addition, the “requests” module needs to be installed before running the sample code.

Refer to Part 1 for how to issue an API token and install the module.

Install the libraries “Pillow” and “Matplotlib”

Install “Pillow” and “Matplotlib” to process and display images in JupyterLab.

Run the following commands in Terminal on Mac or in Command Prompt on Windows. You can also run them in a JupyterLab cell by prefixing them with “!” (for example, !pip install Pillow).

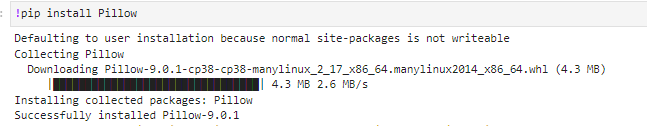

Install “Pillow”

pip install Pillow

A screenshot of the installation running on JupyterLab

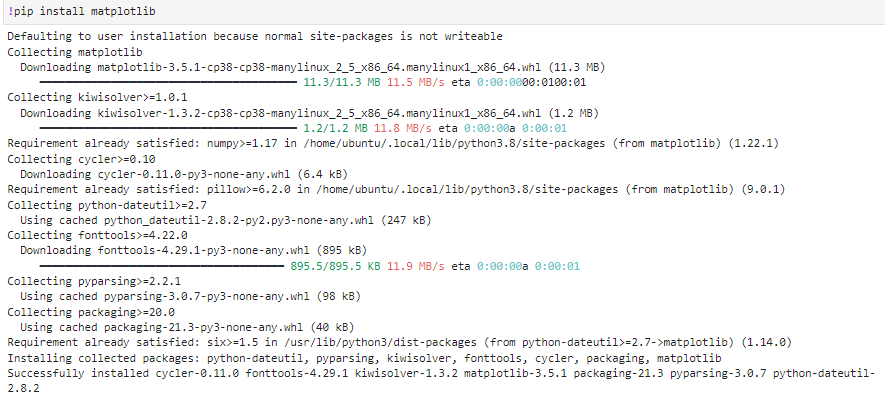

Install “Matplotlib”

pip install matplotlib

A screenshot of the installation running on JupyterLab

Check that the installation succeeded by running the command “pip list”.

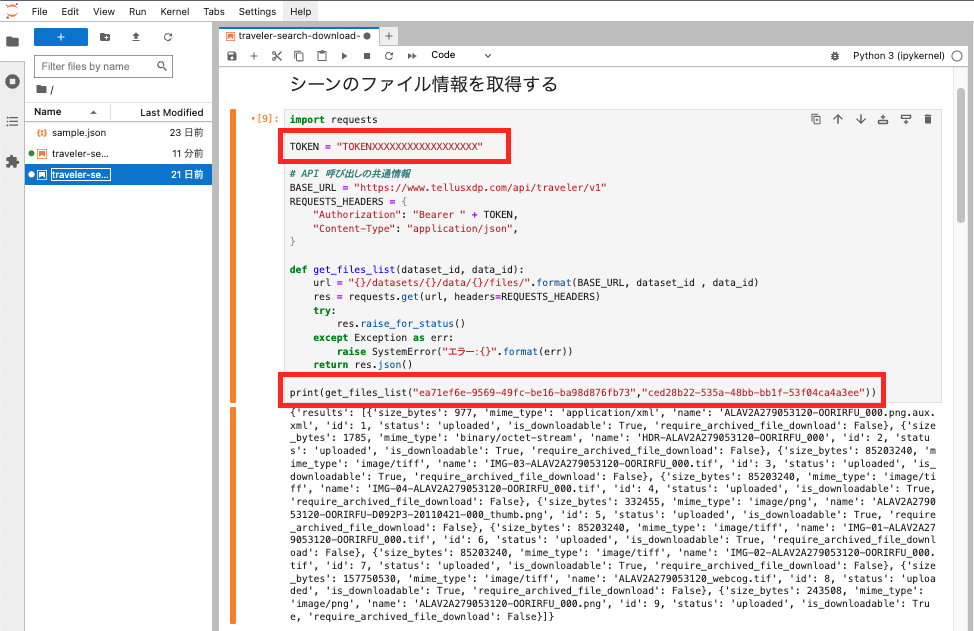
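You can also check the installation from inside a notebook cell instead of reading the full “pip list” output. The sketch below uses Python's standard importlib.metadata module, which requires Python 3.8 or later. It reports the installed version of each package, or None if the package is missing.

```python
from importlib import metadata


def installed_versions(packages=("Pillow", "matplotlib", "requests")):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


print(installed_versions())
```

If any value is None, rerun the corresponding pip install command.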

Get a scene's file information

Use the API endpoint /datasets/{dataset_id}/data/{data_id}/files/ to get the file information list.

Launch JupyterLab and paste the following sample code into it.

Pass the IDs of the dataset and the scene you are interested in as arguments to the function call. The function returns the file information list for that scene.

import requests

TOKEN = "TOKENXXXXXXXXXXXXXXXXXX"

# Common settings for API calls
BASE_URL = "https://www.tellusxdp.com/api/traveler/v1"
REQUESTS_HEADERS = {
    "Authorization": "Bearer " + TOKEN,
    "Content-Type": "application/json",
}

def get_files_list(dataset_id, data_id):
    url = "{}/datasets/{}/data/{}/files/".format(BASE_URL, dataset_id, data_id)
    res = requests.get(url, headers=REQUESTS_HEADERS)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError("Error:{}".format(err))
    return res.json()

print(get_files_list("ea71ef6e-9569-49fc-be16-ba98d876fb73", "ced28b22-535a-48bb-bb1f-53f04ca4a3ee"))

The JupyterLab screen is shown below.

Change the code marked with rectangles to use your own API token and the IDs of the scene you want to query.

For dataset_id (the dataset's ID), use the ID shown on the Dataset Details page of Tellus Traveler.

For data_id (the scene's ID), use the ID shown on the Retrieved Scene Details page.

In addition, the API endpoint /datasets/{dataset_id}/data/{data_id}/files/{file_id}/ returns the information for a single file, identified by its scene and file ID.

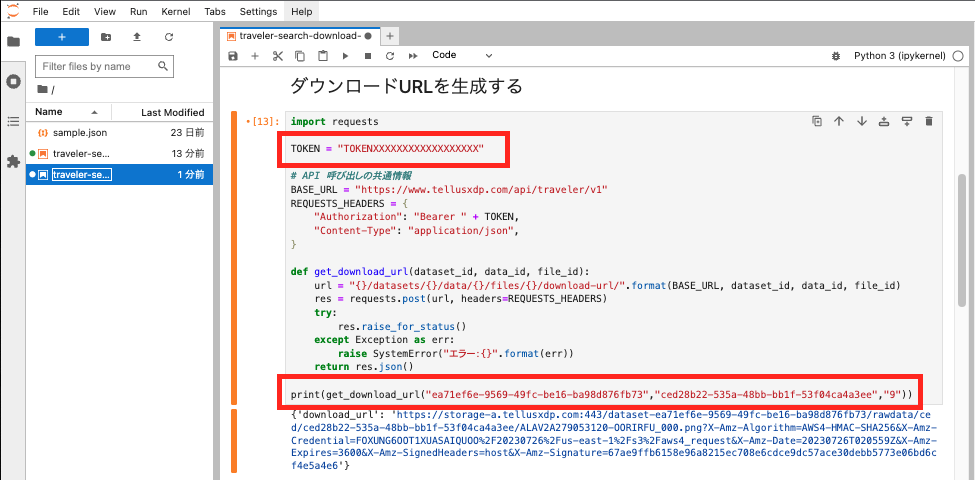
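The list returned by get_files_list can be long. The multi-file script later in this article reads the entries from a "results" key and uses their "id", "name" and "size_bytes" fields. A small helper can reduce the response to just those fields. This sketch assumes that response shape. The sample dictionary is made up for illustration and is not real API output.

```python
def summarize_files(files_response):
    """Return (id, name, size_bytes) tuples from a file-list response.

    Assumes the response holds a "results" list whose entries have the
    "id", "name" and "size_bytes" keys used elsewhere in this article.
    """
    return [
        (f["id"], f["name"], f["size_bytes"])
        for f in files_response.get("results", [])
    ]


# Offline example with a made-up response (not real API output)
sample = {"results": [{"id": "4", "name": "example.tif", "size_bytes": 2048}]}
for file_id, name, size in summarize_files(sample):
    print("{}\t{}\t{:,} bytes".format(file_id, name, size))
```

With a real response, `summarize_files(get_files_list(dataset_id, data_id))` lists the file IDs you can pass to the download URL API.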

Generate a download URL

Use the API endpoint /datasets/{dataset_id}/data/{data_id}/files/{file_id}/download-url/ to generate a download URL for the selected file. As the sample shows, this endpoint is called with POST rather than GET.

Paste the following sample code in JupyterLab.

Change the arguments of the function call to the IDs of the file for which you want to generate a download URL.

import requests

TOKEN = "TOKENXXXXXXXXXXXXXXXXXX"

# Common settings for API calls
BASE_URL = "https://www.tellusxdp.com/api/traveler/v1"
REQUESTS_HEADERS = {
    "Authorization": "Bearer " + TOKEN,
    "Content-Type": "application/json",
}

def get_download_url(dataset_id, data_id, file_id):
    url = "{}/datasets/{}/data/{}/files/{}/download-url/".format(BASE_URL, dataset_id, data_id, file_id)
    res = requests.post(url, headers=REQUESTS_HEADERS)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError("Error:{}".format(err))
    return res.json()

print(get_download_url("ea71ef6e-9569-49fc-be16-ba98d876fb73", "ced28b22-535a-48bb-bb1f-53f04ca4a3ee", "9"))

The JupyterLab screen is shown below.

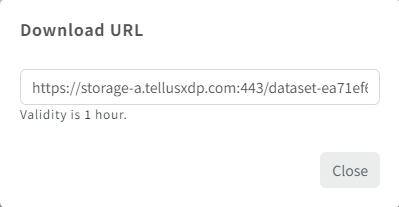

If you open the generated URL in your browser, the file is displayed. The download URL expires after one hour.

Change the code marked with rectangles to use your own API token and the IDs of the file you want.

If the API call returns a 403 error, check the environments in which the target satellite data may be used.

If the data is marked as “Available only in the Tellus environment”, API calls for that data fail outside the development environment provided by Tellus.
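If you want a clearer error message than the generic "Error:..." text, you can map the status code to a troubleshooting hint. The helper below is hypothetical and its wording is this sketch's own. The 403 hint restates the explanation above, and the 404 hint uses only the generic HTTP meaning of "not found". Neither is confirmed Tellus behavior.

```python
def explain_http_error(status_code):
    """Return a troubleshooting hint for an HTTP status code (hypothetical helper)."""
    hints = {
        # From this article: 403 can mean the data may only be used inside Tellus
        403: ("Access denied. Check the environments in which this data may be used; "
              "data marked 'Available only in the Tellus environment' cannot be "
              "accessed from outside the Tellus development environment."),
        # Generic HTTP meaning, not confirmed Tellus behavior
        404: "Not found. Check dataset_id, data_id and file_id.",
    }
    return hints.get(status_code, "HTTP error {}".format(status_code))
```

In the sample functions, you could raise `SystemError(explain_http_error(res.status_code))` inside the `except` branch instead of the generic message.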

A file download URL can also be generated on the Tellus Traveler website.

At the bottom of the Scene Details page on Tellus Traveler, click the “Display URL” button next to the file name.

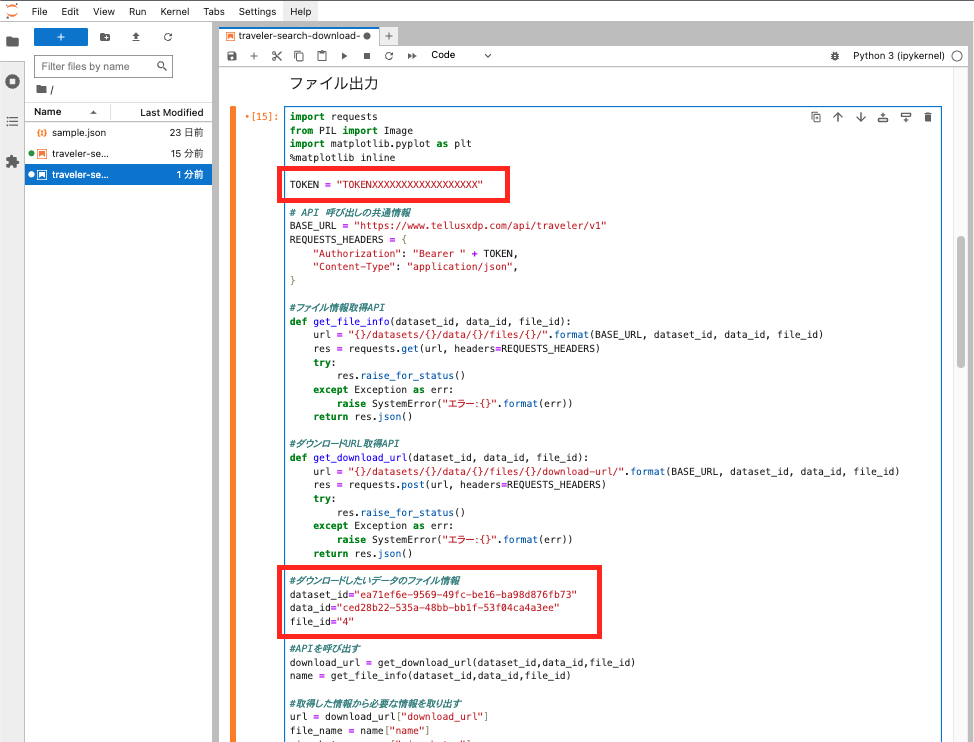
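A download URL expires one hour after it is generated, so a long-running notebook that reuses the same URL may eventually hit an expired link. The helper below is hypothetical. It takes a function that fetches a fresh URL (for example, a wrapper around get_download_url from the samples) and requests a new URL shortly before the hour is up. Its clock starts when the URL is fetched locally, so the safety margin absorbs small delays.

```python
import time

# This article states that a download URL expires one hour after it is generated.
URL_LIFETIME_SECONDS = 60 * 60


class DownloadUrlCache:
    """Reuse a download URL until shortly before it expires (hypothetical helper)."""

    def __init__(self, fetch, margin_seconds=60, clock=time.monotonic):
        self._fetch = fetch            # callable that returns a fresh download URL
        self._margin = margin_seconds  # refresh this many seconds before expiry
        self._clock = clock            # injectable clock, handy for testing
        self._url = None
        self._issued_at = None

    def get(self):
        now = self._clock()
        stale = (
            self._url is None
            or now - self._issued_at >= URL_LIFETIME_SECONDS - self._margin
        )
        if stale:
            self._url = self._fetch()
            self._issued_at = now
        return self._url
```

Usage: `cache = DownloadUrlCache(lambda: get_download_url(dataset_id, data_id, file_id)["download_url"])`, then call `cache.get()` whenever you need the URL.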

Next, download the satellite data from the generated URL and save it to a file.

Paste the following sample code into JupyterLab and run it.

The code uses “Pillow” and “Matplotlib”, which you installed at the beginning of this article.

import requests
from PIL import Image
import matplotlib.pyplot as plt
%matplotlib inline

TOKEN = "TOKENXXXXXXXXXXXXXXXXXX"

# Common settings for API calls
BASE_URL = "https://www.tellusxdp.com/api/traveler/v1"
REQUESTS_HEADERS = {
    "Authorization": "Bearer " + TOKEN,
    "Content-Type": "application/json",
}

# API to get file information
def get_file_info(dataset_id, data_id, file_id):
    url = "{}/datasets/{}/data/{}/files/{}/".format(BASE_URL, dataset_id, data_id, file_id)
    res = requests.get(url, headers=REQUESTS_HEADERS)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError("Error:{}".format(err))
    return res.json()

# API to get a download URL
def get_download_url(dataset_id, data_id, file_id):
    url = "{}/datasets/{}/data/{}/files/{}/download-url/".format(BASE_URL, dataset_id, data_id, file_id)
    res = requests.post(url, headers=REQUESTS_HEADERS)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError("Error:{}".format(err))
    return res.json()

# File information for the data to be downloaded
dataset_id = "ea71ef6e-9569-49fc-be16-ba98d876fb73"
data_id = "ced28b22-535a-48bb-bb1f-53f04ca4a3ee"
file_id = "4"

# Make the API calls
download_url = get_download_url(dataset_id, data_id, file_id)
file_info = get_file_info(dataset_id, data_id, file_id)

# Extract the necessary information from the retrieved data
url = download_url["download_url"]
file_name = file_info["name"]
size_bytes = file_info["size_bytes"]
print("File Name: " + file_name)
print("File Size: " + str(size_bytes))

# Export data to a file (stream=True avoids holding the whole file in memory)
response = requests.get(url, stream=True)
response.raise_for_status()
with open(file_name, "wb") as f:
    for chunk in response.iter_content(chunk_size=1024):
        if chunk:  # skip empty keep-alive chunks
            f.write(chunk)

# Display the output image file
im1 = Image.open(file_name)
plt.imshow(im1)

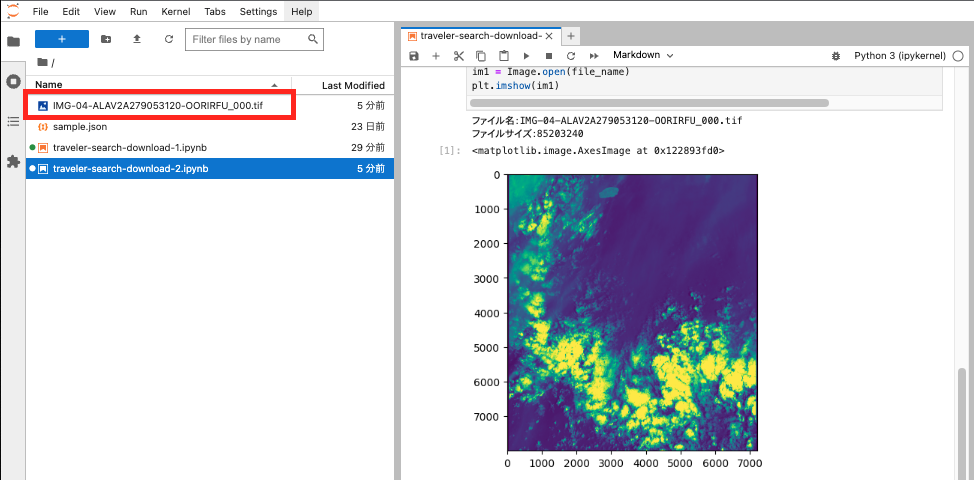

The JupyterLab screen is shown below.

Change the code marked with rectangles to use your own API token and the IDs of the file you want.

Note that some image files are large (from several hundred MiB to several GiB) and may take some time to display.

A new file with the retrieved file name has now been created, and the image is displayed in JupyterLab.

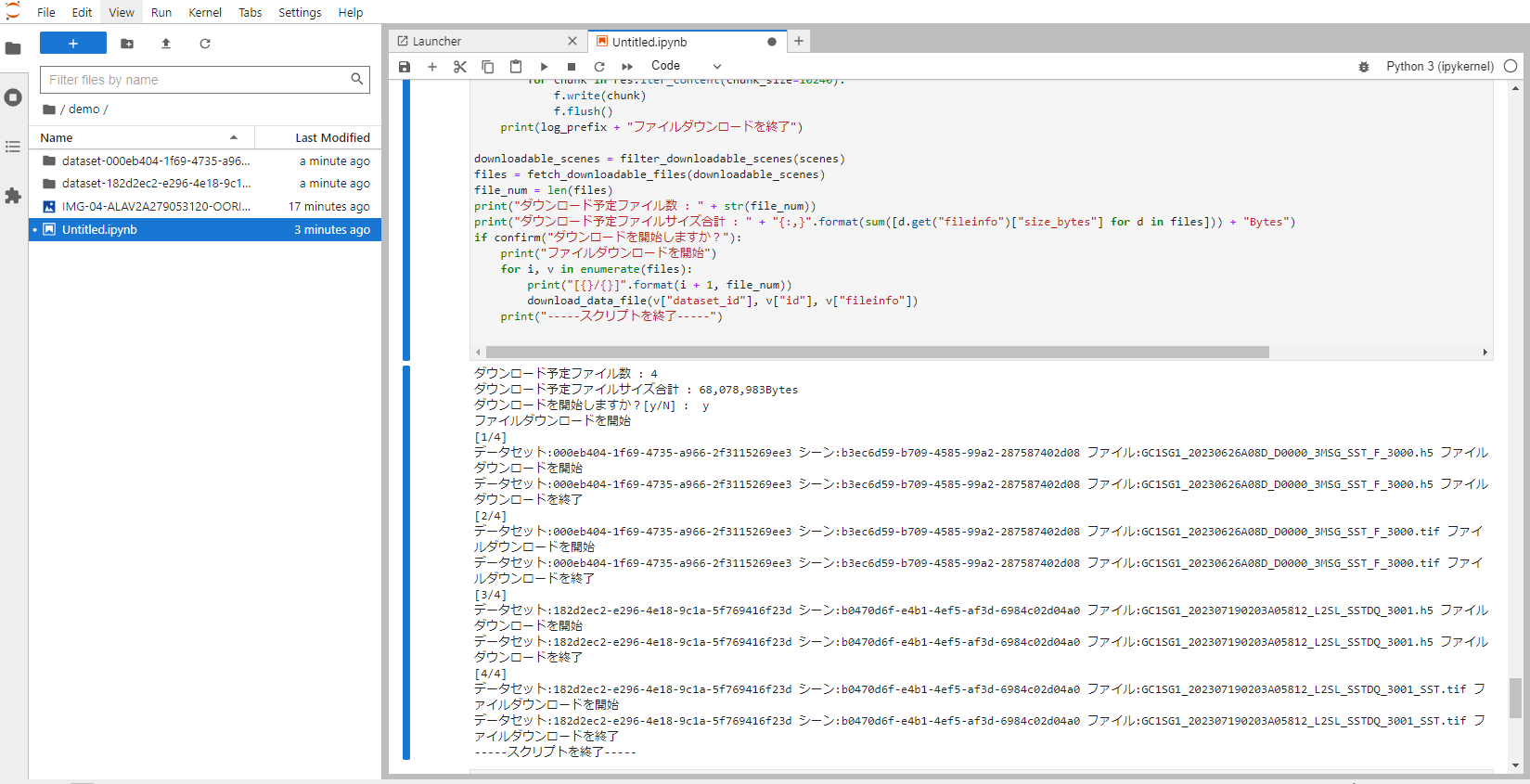
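Some image files are several hundred MiB to several GiB in size. For files that large, it helps to stream the download to disk and see progress as it goes. The sketch below writes any iterable of byte chunks to a file and prints a progress line roughly every 10 MiB. The function name and the reporting interval are this sketch's own choices. In practice, the chunks would come from `response.iter_content(...)` on a request made with `stream=True`.

```python
def write_chunks(chunks, path, total_bytes=None, report_every=10 * 1024 * 1024):
    """Write an iterable of byte chunks to `path`, printing progress as it goes.

    Returns the number of bytes written.
    """
    written = 0
    next_report = report_every
    with open(path, "wb") as f:
        for chunk in chunks:
            if not chunk:  # skip empty keep-alive chunks
                continue
            f.write(chunk)
            written += len(chunk)
            if written >= next_report:
                if total_bytes:
                    print("{:,} / {:,} bytes ({:.0%})".format(
                        written, total_bytes, written / total_bytes))
                else:
                    print("{:,} bytes".format(written))
                # Skip ahead so one large chunk triggers only one report
                while next_report <= written:
                    next_report += report_every
    return written
```

For example, call `write_chunks(response.iter_content(chunk_size=1024 * 1024), file_name, total_bytes=size_bytes)`. A larger chunk size than 1 KiB reduces per-chunk overhead for big files.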

Download multiple files at once

The previous section introduced a script that downloads a single file from a particular scene. In this section, we modify that script to download files from multiple scenes at once.

The script downloads all files registered to each scene.

Specify the dataset IDs and scene IDs of the target scenes in “scenes” and run the script below.

The script skips datasets whose data cannot be downloaded to users' own environments.
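The filtering relies on fields the script reads from the API responses. Datasets are requested with is_order_required set to False and kept only if their permission.allow_network_type is "global". Files are kept only if is_downloadable is true and require_archived_file_download is false. The predicate below restates those conditions so you can check them offline against sample dictionaries. The function name is hypothetical.

```python
def is_user_downloadable(dataset, fileinfo):
    """Apply the same dataset and file conditions as the multi-scene script."""
    allowed_globally = dataset["permission"]["allow_network_type"] == "global"
    return (
        allowed_globally
        and fileinfo["is_downloadable"]
        and not fileinfo["require_archived_file_download"]
    )
```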

When you run the script, it displays the number and total size of the files to be downloaded. Check them, and enter “y” to start the download. To cancel, or to change the target scenes, enter “n” to end the process. If necessary, change the target scene information and rerun the script.

The total size grows with the number of files to be downloaded, so check the available storage capacity on your device before you start.
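The script reports the total size in bytes, which is hard to read for multi-GiB downloads. The sketch below formats a byte count in binary units and checks the free space on the target filesystem with the standard shutil.disk_usage function. Both helper names are hypothetical. You could call them on the computed total before the confirmation prompt.

```python
import shutil


def format_size(num_bytes):
    """Format a byte count with binary units (KiB, MiB, GiB, TiB)."""
    if num_bytes < 1024:
        return "{} bytes".format(num_bytes)
    size = float(num_bytes)
    for unit in ("KiB", "MiB", "GiB", "TiB"):
        size /= 1024
        if size < 1024 or unit == "TiB":
            return "{:.1f} {}".format(size, unit)


def has_enough_space(required_bytes, path=".", reserve_bytes=0):
    """Return True if the filesystem holding `path` can store `required_bytes`
    while still leaving `reserve_bytes` free."""
    free = shutil.disk_usage(path).free
    return free - reserve_bytes >= required_bytes
```

For example, compute `total = sum(d["fileinfo"]["size_bytes"] for d in files)`, print `format_size(total)`, and stop early if `has_enough_space(total)` is False.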

import os
import requests

TOKEN = "TOKENXXXXXXXXXXXXXXXXXX"

# Common settings for API calls
BASE_URL = "https://www.tellusxdp.com/api/traveler/v1"
REQUESTS_HEADERS = {
    "Authorization": "Bearer " + TOKEN,
    "Content-Type": "application/json",
}

### Change the "scenes" below accordingly ###
scenes = [
    {"dataset_id": "000eb404-1f69-4735-a966-2f3115269ee3", "id": "b3ec6d59-b709-4585-99a2-287587402d08"},  # GCOM-C/SST _8days GC1SG1_20230626A08D_D0000_3MSG_SST_F_3000
    {"dataset_id": "182d2ec2-e296-4e18-9c1a-5f769416f23d", "id": "b0470d6f-e4b1-4ef5-af3d-6984c02d04a0"},  # GCOM-C/SST _NRT GC1SG1_202307190203A05812_L2SL_SSTDQ_3001_SST
]

def filter_downloadable_scenes(scenes):
    # Keep scenes whose dataset needs no order and may be downloaded to any network ("global")
    url = "{}/datasets/".format(BASE_URL)
    params = {
        "is_order_required": False
    }
    res = requests.get(url, headers=REQUESTS_HEADERS, params=params)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError("Error:{}".format(err))
    datasets = list(filter(lambda x: x["permission"]["allow_network_type"] == "global", res.json()["results"]))
    dataset_ids = {d.get("id") for d in datasets}
    return [x for x in scenes if x["dataset_id"] in dataset_ids]

def _fetch_scene_files(dataset_id, data_id):
    # Get the list of files registered to a scene
    url = "{}/datasets/{}/data/{}/files/".format(BASE_URL, dataset_id, data_id)
    res = requests.get(url, headers=REQUESTS_HEADERS)
    try:
        res.raise_for_status()
    except Exception as err:
        log_prefix = "Dataset:{} Scene:{} ".format(dataset_id, data_id)
        raise SystemError(log_prefix + "Error:{}".format(err))
    return res.json()["results"]

def fetch_downloadable_files(scenes):
    # Pair each scene with every file that can be downloaded directly
    result = []
    for v in scenes:
        files = filter(
            lambda x: x["is_downloadable"] and not x["require_archived_file_download"],
            _fetch_scene_files(v["dataset_id"], v["id"]),
        )
        for y in files:
            result.append({**v, "fileinfo": y})
    return result

def confirm(message, default="n"):
    # Ask a yes/no question until the user gives a recognizable answer
    if default not in ["n", "y"]:
        default = "n"
    selector = "[y/N]" if default == "n" else "[Y/n]"
    while True:
        answer = input(message + " " + selector + " : ").strip().lower()
        if answer in ["n", "no"] or (default == "n" and answer == ""):
            return False
        elif answer in ["y", "ye", "yes"] or (default == "y" and answer == ""):
            return True

def _get_download_url(dataset_id, data_id, file_id):
    log_prefix = "Dataset:{} Scene:{} File:{} ".format(dataset_id, data_id, file_id)
    url = "{}/datasets/{}/data/{}/files/{}/download-url/".format(BASE_URL, dataset_id, data_id, file_id)
    res = requests.post(url, headers=REQUESTS_HEADERS)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError(log_prefix + "Error:{}".format(err))
    return res.json()

def download_data_file(dataset_id, data_id, fileinfo):
    # Save the file as ./dataset-{dataset_id}/data-{data_id}/{file name}
    dir_path = "./dataset-{}/data-{}/".format(dataset_id, data_id)
    os.makedirs(dir_path, exist_ok=True)
    log_prefix = "Dataset:{} Scene:{} File:{} ".format(dataset_id, data_id, fileinfo["name"])
    print(log_prefix + "Start file download")
    url = _get_download_url(dataset_id, data_id, fileinfo["id"])["download_url"]
    res = requests.get(url, stream=True)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError(log_prefix + "Error:{}".format(err))
    path = os.path.join(dir_path, fileinfo["name"])
    with open(path, "wb") as f:
        for chunk in res.iter_content(chunk_size=10240):
            f.write(chunk)
    print(log_prefix + "Finish file download")

downloadable_scenes = filter_downloadable_scenes(scenes)
files = fetch_downloadable_files(downloadable_scenes)
file_num = len(files)
total_bytes = sum(d["fileinfo"]["size_bytes"] for d in files)
print("Number of files scheduled to be downloaded : " + str(file_num))
print("Total size of files scheduled to be downloaded : {:,} bytes".format(total_bytes))

if confirm("Do you want to start the download?"):
    print("Start file download")
    for i, v in enumerate(files):
        print("[{}/{}]".format(i + 1, file_num))
        download_data_file(v["dataset_id"], v["id"], v["fileinfo"])

print("-----Exit the script-----")

Now the files have been saved in a folder created for each dataset and scene.
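To confirm what was saved, you can walk the folder layout the script creates, ./dataset-{dataset_id}/data-{data_id}/{file name}. The sketch below assumes that layout and ignores anything else in the directory. The function name is hypothetical.

```python
import os


def list_downloaded_files(root="."):
    """Yield (dataset_id, data_id, file_name, size_bytes) for saved files.

    Assumes the ./dataset-{dataset_id}/data-{data_id}/{file_name} layout
    created by download_data_file().
    """
    for ds_entry in sorted(os.listdir(root)):
        ds_path = os.path.join(root, ds_entry)
        if not (ds_entry.startswith("dataset-") and os.path.isdir(ds_path)):
            continue
        for data_entry in sorted(os.listdir(ds_path)):
            data_path = os.path.join(ds_path, data_entry)
            if not (data_entry.startswith("data-") and os.path.isdir(data_path)):
                continue
            for name in sorted(os.listdir(data_path)):
                file_path = os.path.join(data_path, name)
                if os.path.isfile(file_path):
                    yield (ds_entry[len("dataset-"):], data_entry[len("data-"):],
                           name, os.path.getsize(file_path))


for dataset_id, data_id, name, size in list_downloaded_files():
    print(dataset_id, data_id, name, "{:,} bytes".format(size))
```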

You can also download the files of the scenes returned by the search script in Part 1. To do so, combine this script with the Part 1 script and assign the search results to the variable “scenes”.

As in Part 1, save the JSON file containing the search criteria in the same folder as the script file. Replace `%%JSON_FILE_NAME%%` with the name of your JSON file and run the script.

The following is the sample code.

import json
import os
import requests

TOKEN = "TOKENXXXXXXXXXXXXXXXXXX"

# Common settings for API calls
BASE_URL = "https://www.tellusxdp.com/api/traveler/v1"
REQUESTS_HEADERS = {
    "Authorization": "Bearer " + TOKEN,
    "Content-Type": "application/json",
}

def search_scene_by_json(filename):
    # Load the search criteria saved as JSON (see Part 1) and run a scene search
    with open(filename, encoding="utf-8") as f:
        condition = json.load(f)
    url = "{}/data-search/".format(BASE_URL)
    res = requests.post(url, headers=REQUESTS_HEADERS, json=condition)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError("Error:{}".format(err))
    return res.json()["features"]

def filter_downloadable_scenes(scenes):
    # Keep scenes whose dataset needs no order and may be downloaded to any network ("global")
    url = "{}/datasets/".format(BASE_URL)
    params = {
        "is_order_required": False
    }
    res = requests.get(url, headers=REQUESTS_HEADERS, params=params)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError("Error:{}".format(err))
    datasets = list(filter(lambda x: x["permission"]["allow_network_type"] == "global", res.json()["results"]))
    dataset_ids = {d.get("id") for d in datasets}
    return [x for x in scenes if x["dataset_id"] in dataset_ids]

def _fetch_scene_files(dataset_id, data_id):
    # Get the list of files registered to a scene
    url = "{}/datasets/{}/data/{}/files/".format(BASE_URL, dataset_id, data_id)
    res = requests.get(url, headers=REQUESTS_HEADERS)
    try:
        res.raise_for_status()
    except Exception as err:
        log_prefix = "Dataset:{} Scene:{} ".format(dataset_id, data_id)
        raise SystemError(log_prefix + "Error:{}".format(err))
    return res.json()["results"]

def fetch_downloadable_files(scenes):
    # Pair each scene with every file that can be downloaded directly
    result = []
    for v in scenes:
        files = filter(
            lambda x: x["is_downloadable"] and not x["require_archived_file_download"],
            _fetch_scene_files(v["dataset_id"], v["id"]),
        )
        for y in files:
            result.append({**v, "fileinfo": y})
    return result

def confirm(message, default="n"):
    # Ask a yes/no question until the user gives a recognizable answer
    if default not in ["n", "y"]:
        default = "n"
    selector = "[y/N]" if default == "n" else "[Y/n]"
    while True:
        answer = input(message + " " + selector + " : ").strip().lower()
        if answer in ["n", "no"] or (default == "n" and answer == ""):
            return False
        elif answer in ["y", "ye", "yes"] or (default == "y" and answer == ""):
            return True

def _get_download_url(dataset_id, data_id, file_id):
    log_prefix = "Dataset:{} Scene:{} File:{} ".format(dataset_id, data_id, file_id)
    url = "{}/datasets/{}/data/{}/files/{}/download-url/".format(BASE_URL, dataset_id, data_id, file_id)
    res = requests.post(url, headers=REQUESTS_HEADERS)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError(log_prefix + "Error:{}".format(err))
    return res.json()

def download_data_file(dataset_id, data_id, fileinfo):
    # Save the file as ./dataset-{dataset_id}/data-{data_id}/{file name}
    dir_path = "./dataset-{}/data-{}/".format(dataset_id, data_id)
    os.makedirs(dir_path, exist_ok=True)
    log_prefix = "Dataset:{} Scene:{} File:{} ".format(dataset_id, data_id, fileinfo["name"])
    print(log_prefix + "Start file download")
    url = _get_download_url(dataset_id, data_id, fileinfo["id"])["download_url"]
    res = requests.get(url, stream=True)
    try:
        res.raise_for_status()
    except Exception as err:
        raise SystemError(log_prefix + "Error:{}".format(err))
    path = os.path.join(dir_path, fileinfo["name"])
    with open(path, "wb") as f:
        for chunk in res.iter_content(chunk_size=10240):
            f.write(chunk)
    print(log_prefix + "Finish file download")

# Example: scenes = search_scene_by_json("sample.json")
scenes = search_scene_by_json("%%JSON_FILE_NAME%%")
print(scenes)

downloadable_scenes = filter_downloadable_scenes(scenes)
files = fetch_downloadable_files(downloadable_scenes)
file_num = len(files)
total_bytes = sum(d["fileinfo"]["size_bytes"] for d in files)
print("Number of files scheduled to be downloaded : " + str(file_num))
print("Total size of files scheduled to be downloaded : {:,} bytes".format(total_bytes))

if confirm("Do you want to start the download?"):
    print("Start file download")
    for i, v in enumerate(files):
        print("[{}/{}]".format(i + 1, file_num))
        download_data_file(v["dataset_id"], v["id"], v["fileinfo"])

print("-----Exit the script-----")
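In this script, each search result is passed to the download functions as is. Those functions read only its "dataset_id" and "id" keys, but the whole record is copied into every file entry. The sketch below is useful if you want to review or save the scene list before downloading. It reduces the search results to the same {"dataset_id", "id"} form as the "scenes" list in the previous script. It also drops duplicates in case the same scene appears more than once. The sketch assumes each search result carries those two keys at the top level, as the script relies on. The function name is hypothetical.

```python
def to_scene_refs(features):
    """Reduce search results to unique {"dataset_id", "id"} pairs, keeping order."""
    seen = set()
    refs = []
    for feature in features:
        key = (feature["dataset_id"], feature["id"])
        if key not in seen:
            seen.add(key)
            refs.append({"dataset_id": key[0], "id": key[1]})
    return refs
```

For example, `scenes = to_scene_refs(search_scene_by_json("%%JSON_FILE_NAME%%"))` produces a list you can print, edit, or paste into the previous script.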

Summary

This two-part article introduced how to use the Tellus Satellite Data Traveler APIs to search for scenes and download satellite data.

We provide a variety of Tellus Satellite Data Traveler APIs in addition to the ones introduced here.

Please feel free to try them out.